Where We've Been

We give both commissioned and independent presentations on topics that we hope will be of use to the broader evaluation and nonprofit field. Previous conference presentations and speaking engagements are listed below.

Real Time Evaluation: Tips, Tools, and Tricks of the Trade (Wednesday, November 11)

How can an evaluator meaningfully convey findings to stakeholders based on data collected that same day? How can real time evaluation really be done in real time? This Ignite talk was based on Innovation Network’s experiences with facilitating real time evaluation in health policy settings, and introduced AEA 2015 participants to three tools that can be used as part of any evaluator’s real time evaluation toolbox: surveys, H-forms, and timelines. In this session, Yuqi Wang provided an overview of each tool; showed how these tools can aid data collection, analysis, and communication of findings in real time; and shared lessons learned from Innovation Network’s experiences with these three tools during the evaluation process.

Slides | Handout

Putting Data in Context: Timelining for Evaluators (Friday, November 13)

Creating a timeline is a method for picturing or seeing events as they take place over time. By documenting major occurrences in chronological order, evaluators are able to identify patterns, themes, or trends that they may not have seen otherwise. A timeline allows evaluators to “zoom out” and look at the broader landscape, so that they are better positioned to think through and understand the context in which events occur. Having a timeline is especially useful for complex, multi-year evaluation projects with several threads of evaluation, where documenting the process is just as important as measuring the outcome itself. Creating a timeline has three key components: planning, populating, and revising. In this session, Kat Athanasiades, Smriti Bajracharya and Katherine Haugh demonstrated how to incorporate a timeline into a report, how to use a timeline to track progress internally, and how to utilize data visualization principles to create a visual timeline.

Slides | Handout

Data Placemats: Practical Design Tips (Friday, November 13)

Increasing stakeholder involvement throughout the evaluation lifecycle not only enhances stakeholder buy-in to the final evaluation results but also ensures that the evaluator takes multiple viewpoints into consideration and can provide a more comprehensive picture of a program or initiative. Data placemats, a data viz technique to improve stakeholder understanding of data, can be used to communicate preliminary evaluation results during the analysis phase of the evaluation lifecycle. When done correctly, they offer stakeholders an opportunity to form their own judgments about the data and weigh in prior to the final report. In this session, Veena Pankaj reviewed the concept of data placemats, focusing specifically on the nuts and bolts of constructing a data placemat.

Slides

Success and Failure in the Evaluation Process (Tuesday, March 17)

What do the terms “success” and “failure” really mean in the philanthropic world? Funders have taken different approaches to learning from initiatives that haven’t gone quite as they had hoped. Some funders want to learn from their mistakes, some provide technical assistance to lagging grantees, and some want to focus their light on “bright spots” and grantee successes. In this session, Kat Athanasiades from Innovation Network will discuss how and when her organization uses grant reports in evaluation; how and why getting good evaluation data from grant reports is difficult; and potential ways to make it easier for grantees to report on failure in a way that could be useful to evaluators.

Slides

Data Placemats: A DataViz Technique to Improve Stakeholder Understanding of Evaluation Results, AEA Coffee Break Webinar (Friday, January 23)

As evaluators, we understand the importance of participatory evaluation and its connection to evaluation use. A data placemat is a tool that can be used to communicate preliminary evaluation results before writing the final report. Data placemats are usually used in the analysis phase of the evaluation lifecycle and offer stakeholders an opportunity to form their own judgments about the data and weigh in prior to the writing of the final report. This coffee break webinar will provide an overview of the different steps involved in creating and implementing data placemats.

Slides from a similar presentation

Do-It-Yourself Logic Models: Examples, Templates, Checklists, and More (Thursday, November 20)

Logic models are nonprofit road maps: they help you diagram where you are now and where you hope to be in the future. They are used for program planning, program management, fundraising, communications, consensus-building, and evaluation planning. Want to make a logic model, but not sure where to start?

In this 90-minute webinar, Veena Pankaj and Kat Athanasiades taught the nuts and bolts of logic models--what they are, how to make them, who should be involved in the process, and how often to update them. They provided tools including a logic model template, a free online logic model builder, and a logic model checklist. They also shared several examples from real nonprofits so that you're ready to hit the ground running.

Slides from a similar presentation | Logic model builder | Logic model workbook

Data Placemats: A DataViz Technique to Improve Stakeholder Understanding of Evaluation Results (Thursday, October 16)

Data placemats are used to communicate preliminary evaluation results to stakeholders prior to writing the final report. Data placemats offer stakeholders an opportunity to weigh their own judgments about the data and share their thoughts prior to the final report. In this session, Veena Pankaj will describe various ways to improve stakeholder engagement, as well as ways to increase stakeholder understanding of evaluation results.

- Date: Thursday, October 16, 2014

- Time: 1:00-1:45 PM

- Location: Room 104, Hyatt Regency Denver at Colorado Convention Center (650 15th St, Denver, CO 80202)

- Session Number: 517

Conference Program

Make your Data Count: New, Visual Approaches to Evaluation Reporting (Friday, October 17)

Charts and graphs built on dataviz principles are transforming evaluation reporting and increasing the evaluator's communication power. In this session, Johanna Morariu, Kat Athanasiades, Ann K. Emery and Veena Pankaj will provide principles and case studies of new, visual approaches to evaluation reporting. The session will provide examples, such as incorporating a wealth of visuals in text reports, using large format hand-outs to emphasize findings, and designing slide reports.

- Date: Friday, October 17, 2014

- Time: 4:30 - 5:15 PM

- Location: Room 105, Hyatt Regency Denver at Colorado Convention Center (650 15th St, Denver, CO 80202)

- Session Number: 1223

Conference Program

A Framework for Evaluating Change within an Advocacy Ecosystem, Explored Through Four Case Studies (Saturday, October 18)

As participants in a panel discussion, Kat Athanasiades and Johanna Morariu will be presenting on a case study of the Missouri Foundation for Health (MFH). Using the Framework for Evaluating Advocacy Field Building, Innovation Network and the Center for Evaluation Innovation are currently gauging how MFH shapes this field through its grantmaking. A discussion of data collection activities will give the audience ideas about how to evaluate these dimensions, lessons learned from the process, and what has been revealed through the evaluation of Skills & Resources and Adaptive Capacity in the field.

- Date: Saturday, October 18, 2014

- Time: 8:00-9:30 AM

- Location: Room 703, Hyatt Regency Denver at Colorado Convention Center (650 15th St, Denver, CO 80202)

- Session Number: 599

Conference Program

p2i Week: Message, Design and Delivery (September 5, 2014)

by Johanna Morariu and Ann K. Emery

Want to rock your next webinar? Take a look at this blog post on AEA365, written by Johanna Morariu and Ann K. Emery. They've adapted p2i's preparation, design and delivery strategies for webinars and have created several strategies of their own.

Johanna and Ann also discuss how you can structure your physical space so you are able to deliver your best webinar yet!

Data Viz Tips for Emerging Practitioners in Philanthropy (June 6, 2014)

by Johanna Morariu and Ann K. Emery

Interested in learning how dataviz can get your colleagues to pay attention to your organization's most important data? Take a look at the data visualization materials that Johanna Morariu and Ann K. Emery presented at the Emerging Practitioners in Philanthropy 2014 National Conference. Are you just getting started with dataviz? Check out the list of All-Around Great Visualization tools that Johanna and Ann spoke about during their presentation.

Handout | Slides

Evaluation Essentials for Nonprofits: Terms, Tips & Trends (June 2, 2014)

by Johanna Morariu and Ann K. Emery

Interested in using a logic model or evaluation plan template for your own nonprofit work? Ann K. Emery and Johanna Morariu led an Evaluation 101-level session for the Young Nonprofit Professionals Network on June 2 in Washington, DC.

Slides | MindMap | Logic Model Template

DataViz! Tools, Tips, and How-Tos for Visualizing Your Data (March 13, 2014)

by Johanna Morariu and Ann K. Emery

Presentation at the Nonprofit Technology Conference in Washington, DC

Memos and metrics, emails and texts, newsletters and reports: is your nonprofit suffering from information overload? We consume 34 gigabytes, or 100,500 words, of information every day. Our brains are overwhelmed and struggling to keep up. Data visualization—or dataviz—is one of your nonprofit’s strongest weapons against information overload. Ann Emery, Andrew Means, and Johanna Morariu led “DataViz! Tips, Tools, and How-Tos for Visualizing Your Data” at the Nonprofit Technology Conference in March 2014.

Blog Post Preview | Description | Slides | Resource Handout

Evaluation Blogging: Improve Your Practice, Share Your Expertise, and Strengthen Your Network (October 19, 2013)

by Ann Emery (Innovation Network), Susan Kistler (iMeasure Media), Sheila B. Robinson (Greece Central School District), and Chris Lysy (Westat)

Washington, DC

Want to start blogging about evaluation, but not sure where to start? Started, but want to know what to expect (or what to do next, or how to keep it going)? Ready to take your independent consulting practice to the next level? Or just want to have fun with a new way of communicating with fellow evaluators? In this Think Tank session, four experienced bloggers shared strategies for success and addressed potential concerns.

Description | Slides

How to Climb the R Learning Curve Without Falling Off the Cliff: Advice From Novice, Intermediate, and Advanced R Users (October 19, 2013)

by Tony Fujs (Latin American Youth Center) and Will Fenn and Ann Emery (Innovation Network)

Washington, DC

R is hotter than ever in the evaluation field as evaluators are looking for ways to improve their data management, analysis, and visualizations. First-time R users are asking themselves, Is R right for my evaluation work? Where do I start if I want to learn R? How long will it take to learn R? Evaluators without programming experience are often frustrated by R's steep learning curve. These novice R users are left wondering, How can I climb the R learning curve without falling off the cliff? To address these common questions, we assembled a panel of beginner, intermediate, and advanced R users. Panelists shared real-world examples of how we've used R to create data visualizations that were previously impossible for evaluators who don't have access to expensive software packages. Panelists also shared their experiences learning R and highlighted the tips, tricks, and resources needed to transform beginner R users into R superstars and speakRs.

Description | Handout

Seeing the Forest (Beyond the Trees): Learning Across the Experiences of Seven Advocacy Evaluators (October 19, 2013)

by Johanna Morariu (Innovation Network), Jara Dean-Coffey (jdcPartnerships), Tom Kelly (Hawai`i Community Foundation), Claire Hutchings (Oxfam Great Britain), David Devlin-Foltz (The Aspen Institute), Robin Kane (RK Evaluation & Strategies LLC), Jared Raynor (TCC Group), and Anne Gienapp (Organizational Research Services)

Washington, DC

Advocacy and policy change evaluation continues to evolve and mature--from a fledgling field a few years ago to the flourishing field of today. Evaluators are advancing as well, developing an increasingly robust collective understanding about what works for advocacy evaluation. In this session a diverse group of seven advocacy evaluators explored and synthesized observations drawn from an array of real-world experiences. Panelists spoke to targeted questions, weaving in their wealth of experience and examples. The conversation began with this question: "What have you learned from your advocacy evaluation experience?" From there, panelists delved into the range of cases they represent. The session identified commonalities among these cases as well as contradictions/inconsistencies to move toward a field-level understanding. Presenters also used graphic recording methods to organize and report out on session themes.

Description | Slides

The Conference is Over, Now What? Professional Development for Novice Evaluators (October 18, 2013)

by Ann Emery

Washington, DC

Are you a recent graduate or novice evaluator? If so, cheers to your first few years in the evaluation field! Your professional development is just beginning. Adding skills to your evaluation toolbox early will help you conduct better evaluations (and will make you an attractive candidate with plenty of employment options!). Your days of reading textbooks are mostly behind you, so you'll need to find new avenues for increasing your skills and staying current. But sometimes it's hard to know where to look to further your own professional development. This roundtable explored a dozen professional development resources for recent graduates and novice evaluators.

Description | Handout

What is Harder than Building Public Will? Evaluating Whether it Worked! (October 18, 2013)

by Johanna Morariu (Innovation Network), Jennifer Messenger Heilbronner (Metropolitan Group), Doug Root (Heinz Endowments), Scott Downes (The Colorado Trust), Jewlya Lynn (Spark Policy Institute), and Phillip Chung (The Colorado Trust)

Washington, DC

One of the more challenging outcomes to measure in the field of advocacy evaluation is the development of public will around a broadly defined policy issue. Yet, foundations are increasingly recognizing that public will is a precondition to achieving their policy aims and lasting social change. Public will building interventions often rely on telephone polling to develop a baseline and then later to see changes as a result of their interventions, but public will building is a slow process and repeated polling in the short term can lead to disappointing results. What can evaluation offer in the meantime? The panelists described their different methods of evaluation for public will building, from formative evaluation to guide strategy development to monitoring approaches and summative evaluation. They shared both the methods they used and the challenges they faced, with recommendations to evaluators working in this complex arena.

Description

5 Evaluation Lessons From a Recovering Program Officer (October 17, 2013)

by Will Fenn

Washington, DC

As a profession, evaluators must constantly demonstrate the value of their work to remain relevant. Each evaluation represents a significant investment in resources that many argue could be used to provide more programming. A primary concern of maintaining the value proposition of the evaluation field is ensuring that evaluations remain manageable and useful to all participants. The Ignite session offered five succinct lessons from a former foundation Program Officer that can be applied across program types to help nonprofits and foundations improve evaluation coordination and use. The session focused on improving the practice of evaluation by recommending a cooperative approach to build evaluation capacity in nonprofits and to improve practice in the field.

Description | Slides | Recording

Assessing Capacity for Community Change Efforts: Learnings From an Adaptive Initiative (October 17, 2013)

by Kat Athanasiades and Veena Pankaj (Innovation Network) and Deanna Van Hersh (Kansas Health Foundation)

Washington, DC

Should community change efforts be focused on funding coalitions or funding a flexible group of community leaders? The Kansas Health Foundation has embraced a four-pronged community change model that targets community leaders as key agents of change within each of their funded communities. Innovation Network, the evaluation partner for the Kansas Health Foundation's Healthy Communities Initiative, developed and deployed an assessment tool designed to contribute to the assessment of leadership capacity in effecting community change. Presenters shared lessons learned about developing and deploying a capacity assessment tool as well as what these tools can - and cannot - tell you about a coalition's capacity in conducting community change work.

Description | Slides

Excel Elbow Grease: How to Fool Excel into Making (Pretty Much) Any Type of Chart You Want (October 17, 2013)

by Ann Emery

Washington, DC

Tired of using the same old pie charts, bar charts, and line charts in Excel to communicate your evaluation results? Don't have expensive data visualization software? Can't afford to hire a graphic designer to transform your default Excel charts into polished masterpieces? In this 45-minute demonstration, Ann Emery showed attendees how to leverage 'Excel elbow grease' to communicate data effectively in Microsoft Excel.

Description | Slides

How Can We Evaluate Change Within an Advocacy Ecosystem? Developing an Evaluation Framework for Field Building (October 17, 2013)

by Johanna Morariu (Innovation Network), Tanya Beer (Center for Evaluation Innovation), Jewlya Lynn (Spark Policy Institute), Gigi Barsoum (Barsoum Consulting), and Robin Kane (RK Evaluation & Strategies LLC)

Washington, DC

An increasing number of foundations have ventured into advocacy funding over the past decade. While many design their advocacy grantmaking to accomplish specific policy goals, a growing number are designing strategies that aim to influence the power and composition of an ecosystem or field of advocates to shape the policy agenda, thereby equipping the field to make progress on a variety of policy targets over the long term. In February 2013, the Center for Evaluation Innovation (CEI) convened a group of funders to explore their strategies for building advocacy fields and to identify relevant outcomes and measurement strategies. Building on this work, CEI will briefly present the results from the convening and participants will work in small groups focused on one of five field building dimensions: composition, infrastructure, adaptive capacity, connectivity, and framing. The result will be a draft framework for evaluating field building in advocacy.

Description

Performance Management and Evaluation: Two Sides of the Same Coin (October 16, 2013)

by Isaac Castillo (DC Promise Neighborhoods Initiative) and Ann Emery (Innovation Network)

Washington, DC

Performance management and evaluation: what's the difference? With an increasing emphasis on measurement and impact, service providers and their funders are pushing for increasingly sophisticated evaluation approaches such as experimental and quasi-experimental designs. However, experimental methods are rarely appropriate, feasible, or cost-effective for the majority of organizations and service providers. In contrast, performance management (the ongoing process of collecting and analyzing information to monitor program or organizational performance) is something that every organization could, and should, do on a regular basis. Isaac Castillo and Ann Emery introduced the key differences between internal performance management processes and formal external evaluations. These terms are often confused and conflated because both approaches utilize many of the same techniques. However, their philosophies, timing, and purposes vary.

Description | Slides | Recording

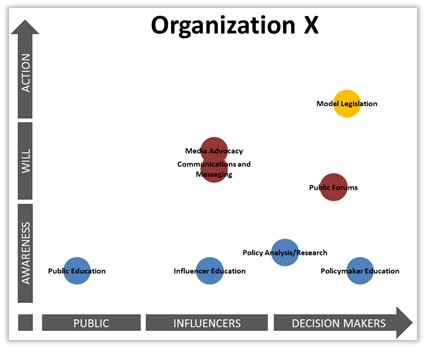

Understanding the Public Policy Landscape: Lessons From a Retrospective Evaluation (October 16, 2013)

by Veena Pankaj and Kat Athanasiades

Washington, DC

This presentation discussed how the Framework for Public Policy Advocacy was used in a retrospective evaluation of a large-scale philanthropic public policy campaign. The framework helped to identify and plot grantee strategies across two dimensions - target audience(s) and desired outcomes. Using bubble charts to illustrate the strategic focus of each grantee, the evaluation team was able to recognize trends among the grantee partners, identify gaps, and provide an aggregate overview of the types of strategies being supported. Veena Pankaj and Kat Athanasiades highlighted how the framework was used in selecting and developing appropriate data collection methodologies based on the strategic focus of the grantees. These methodologies were created to specifically correspond to the outcome areas and target audience(s) highlighted by the framework. Finally, the presenters reflected on lessons learned and shared insights for improving advocacy evaluation at the portfolio level.

Description | Slides | Handout

Facilitation: An Essential Ingredient in Evaluation Practice (October 16, 2013)

by Veena Pankaj (Innovation Network) with Rita Fierro (Fierro Consulting), Alissa Schwartz (Solid Fire Consulting), Tessie Catsambas (EnCompass LLC), Kataraina Pipi (FEM 2006 Ltd.), Patricia Jessup (InSites), Jean King (University of Minnesota), Tobi Mae Lippin (New Perspectives Inc.), Kristin Bradley-Bull (New Perspectives Inc.), Rosalie Torres (Torres Consulting Group LLC), Maria Scordialos (Living Wholeness Institute), Vanessa Reid (Living Wholeness Institute), Laurel Stevahn (Seattle University), and Michele Tarsilla (Social Impact Inc.)

Washington, DC

There are many intersections between evaluation and facilitation. In evaluation, facilitation can play a role in helping groups map a theory of change, in data collection through focus groups or other dialogues, and in analysis by involving stakeholders in making meaning of the findings. While each of these steps is described in evaluation texts and the literature, less attention is given to describing facilitation approaches and techniques. Even less is written about evaluating facilitation practices, which are integral to organizational development and collaborative decision-making. Choices for facilitation methods to implement depend on the client, context, and priorities of the work, as well as the practitioner's skill, confidence, and philosophy. This think tank brought together a group of evaluators and facilitators collaborating on a publication about these complementary practices. We hope to spark a deeper conversation and reflections among participants about the role of facilitation in evaluation and of evaluation in facilitation.

Description

Coalition Assessment: Case Study and Frequently Asked Questions (September 11, 2013)

by Veena Pankaj, Johanna Morariu, Kat Athanasiades, and Ann Emery

Washington, DC

We shared information about our Coalition Assessment Tool with an organization in Washington, DC. We discussed the tool's development, which involved an extensive literature review, vetting, and pilot testing over a 10-month period. We also shared the seven sections of the tool and how these sections can be modified and adapted for different coalitions.

Slides

Evaluation: Finding a Common Ground (July 30, 2013)

by Kat Athanasiades and Veena Pankaj

Milwaukee, WI

While common frameworks and approaches for evaluation have been developed across multiple fields, regional associations for grantmakers have, for the most part, been left out of this dialogue. The purpose of this session is to highlight the common threads that distinguish regional associations from other organizational genres in the social sector. Regional associations promote effectiveness in philanthropy by providing grantmakers with opportunities to engage with others, share ideas, and generate best practices that support both the individual and collective impact of philanthropy.

While each regional association employs its own unique mix of strategies to achieve impact, most are working towards a shared goal of promoting effectiveness within philanthropy. In this session, Innovation Network engaged participants in a group dialogue to draw out high level strategies and desired outcomes that are common across most regional associations. The resulting logic model is a tool that regional associations can use as a starting point when planning for evaluation.

Slides | Conference Agenda

Ask an Evaluator (June 12 & 26, 2013)

Washington, DC

These Ask an Evaluator events were for nonprofit leaders seeking to better understand evaluation basics and connect with each other. We offered group instruction and one-on-one guidance about logic models, evaluation plans, participatory analysis, and the evaluation lifecycle.

Assessing the Capacity of Community Coalitions to Advocate for Change (May 22, 2013)

by Veena Pankaj, Johanna Morariu, Kat Athanasiades, and Ann Emery

Washington, DC

Research has shown that high-capacity coalitions are more successful in effecting community change. While a number of coalition assessment tools have been developed, documentation is scarce regarding how they are implemented, how the results are used, and whether they are predictive of coalition success in collaborative community change efforts. This session will focus on a coalition assessment tool that was designed by Innovation Network to assess changes in coalition capacity over time. Developed for a health promotion initiative of a major health foundation, this tool is designed to assess coalition progress in eight key areas across twelve different community coalitions, over the course of a three year initiative. Presenters will share lessons learned from the first year of the initiative about developing and deploying the assessment tool, as well as what these tools can—and can’t—tell you about a coalition’s capacity in conducting community change work. In addition presenters will share how information collected from this assessment can be communicated back to the coalitions using data visualization approaches to effectively communicate the data.

Dataviz! Or, How to Win at Communication and Influence People (May 17, 2013)

by Johanna Morariu

Los Angeles, CA

Are you intrigued by data and information visualization—dataviz—and how it could improve your communication strategy? Are you interested in the range of dataviz options, but unsure which is right for you? Or are you maybe even drowning in data and looking for someone to throw you a life-saving suggestion for tools to transform your data into a message? Through lively sharing and discussion this session will cover a range of topics related to nonprofit engagement with data and information visualization, including: What is Data/Information Visualization? Why Should My Nonprofit Use Data/Information Visualization? And How Do I Get Started with Data/Information Visualization?

Slides | Handout

Beyond Boring Bar Charts (April 22, 2013)

by Ann Emery

Minneapolis, MN

Tired of using the same old pie charts, bar charts, and line charts to tell your nonprofit’s story? Don’t have expensive data visualization software? Can’t afford to hire a graphic designer to transform your default Excel charts into polished masterpieces? In this 5-minute Ignite presentation at the Nonprofit Technology Conference, Ann Emery showed nonprofit leaders how to transform their default Excel charts by simply leveraging a little Excel elbow grease.

Slides

Evaluation as a Tool for Creating and Leading a Results-Based Learning Culture (April 5, 2013)

by Johanna Morariu and Will Fenn

Chicago, IL

Johanna Morariu and Will Fenn discussed effective evaluation initiatives, highlighting useful tools such as those available from Innovation Network’s Point K.

Conference booklet | Slides

State of Evaluation: Evaluation Practice and Capacity in the Nonprofit Sector (March 22, 2013)

by Johanna Morariu and Ann Emery

Washington, DC

Slides | State of Evaluation 2012

The State of Evaluation in the Social Sector (March 21, 2013)

by Johanna Morariu and Will Fenn

Washington, DC

Measurement, evaluation, and learning are hotter than ever in the social sector. Foundations and nonprofits are focused on answering the question What difference are we making? And the field of evaluation has advanced in promising ways, developing meaningful evaluation approaches to better fit the latest philanthropic and nonprofit strategies. In this session Johanna Morariu and Will Fenn shared their observations regarding the state of evaluation in the social sector drawing on their experience consulting with funders and nonprofit organizations and Innovation Network’s research publication State of Evaluation 2012: Evaluation Practice and Capacity in the Nonprofit Sector.

Registration | Evaluation concepts mindmap

State of Evaluation 2012: Evaluation Practice and Capacity in the Nonprofit Sector (February 25, 2013)

by Johanna Morariu, Kat Athanasiades, and Ann Emery

Washington, DC

Nonprofits hear a lot of talk about evaluation these days—metrics and measurements, indicators and impact, efficiency and effectiveness. Everyone seems to want evaluation results—from nonprofit staff themselves to donors to board members. But there’s a gap in the conversation: What are nonprofits really doing to evaluate their work? How are they using evaluation results? Do nonprofit staff have the knowledge, skills, and resources they need to carry out effective evaluation?

Description | Slides | State of Evaluation 2012

Creating and Automating Dashboards Using Microsoft Word and Excel (January 15, 2013)

by Agata Jose-Ivanina and Ann Emery

Data dashboards put stakeholders in the driver’s seat by providing instant, understandable evaluation results. In this 3-hour webinar, Agata Jose-Ivanina and Ann Emery presented a process that allows you to create automated dashboards that are valuable for programs and save time for evaluators. The webinar covered principles of dashboard design using MS Word and Excel -- tools that most users already have.

Place-Based Evaluation Community Webinar: Assessing Community Capacity (November 13, 2012)

by Veena Pankaj and Kat Athanasiades

Veena Pankaj and Kat Athanasiades gave a webinar about assessing coalition capacity with Innovation Network's Coalition Assessment Tool.

Twitter for Evaluators (November 7, 2012)

by Ann Emery and Johanna Morariu

Washington, DC

Ann Emery and Johanna Morariu led a class and discussion on how evaluators can use Twitter.

State of the Field: Updated, Longitudinal Findings about Nonprofit and Philanthropic Evaluation Practices and Capacities (October 27, 2012)

by Johanna Morariu

Minneapolis, MN

The State of Evaluation project provides valuable insight to all those who work in and with the nonprofit sector. The project is designed to collect longitudinal data to document evaluation trends in the U.S. nonprofit sector, including how nonprofits staff evaluation, how evaluation is funded, why evaluation is undertaken, how evaluation results are used, and much more. This year marks the beginning of longitudinal data and analysis, drawing from the first iteration of the project in 2010.

Description | Slides

Why I Love Internal Evaluation (October 27, 2012)

by Ann Emery

Minneapolis, MN

Description | Slides | Recording

Creating and Maintaining a Learning Culture in a Multi-Service Nonprofit Organization (October 26, 2012)

by Ann Emery

Minneapolis, MN

Description

Data Placemats: A DataViz Technique to Improve Stakeholder Understanding of Evaluation Results (October 25, 2012)

by Veena Pankaj

Minneapolis, MN

Slides

Measuring Influence Strategies in Philanthropy (October 25, 2012)

by Johanna Morariu

Minneapolis, MN

Description

Top 10 Benefits of Connecting With Your AEA Affiliate (October 25, 2012)

by Ann Emery

Minneapolis, MN

Description | Slides | Recording

Building Nonprofit Capacity to Evaluate, Learn and Grow Impact (September 26, 2012)

by Johanna Morariu and Veena Pankaj

Appleton, WI

Innovation Network Directors Johanna Morariu and Veena Pankaj facilitated a training for grantmakers about building evaluation capacity—within their foundations and with grantees.

Description

Building Nonprofit Capacity to Evaluate, Learn and Grow Impact (September 13, 2012)

by Johanna Morariu and Veena Pankaj

Philadelphia, PA

Innovation Network Directors Johanna Morariu and Veena Pankaj facilitated a training for grantmakers about building evaluation capacity—within their foundations and with grantees.

Description

Building Nonprofit Capacity to Evaluate, Learn and Grow Impact (August 15, 2012)

by Johanna Morariu and Veena Pankaj

St. Louis, MO

Innovation Network Directors Johanna Morariu and Veena Pankaj facilitated a training for grantmakers about building evaluation capacity—within their foundations and with grantees.

Description

Building Nonprofit Capacity to Evaluate, Learn and Grow Impact (July 17, 2012)

by Johanna Morariu and Veena Pankaj

Florida

Innovation Network Directors Johanna Morariu and Veena Pankaj facilitated a training for grantmakers about building evaluation capacity—within their foundations and with grantees.

Evaluation Training (June 8, 2012)

by Johanna Morariu and Veena Pankaj

Aurora, IL

Johanna Morariu and Kat Athanasiades facilitated a training focused on evaluation planning, deepening evaluation skills, and building evaluation capacity.

Picturing Your Data is Better Than 1,000 Numbers: Data Visualization Techniques for Social Change (April 4, 2012)

by Johanna Morariu

San Francisco, CA

Innovation Network Director Johanna Morariu joined nonprofit network guru Beth Kanter and Brian Kennedy of Children Now at the 2012 Nonprofit Technology Conference to present on a range of topics related to nonprofit engagement with data and information visualization.

Slides and Recording

The Hard Truth About Strategic Learning in Five Minutes (March 14, 2012)

by Ehren Reed

Seattle, WA

Many grantmakers are trying to incorporate strategic learning into their grantmaking, particularly philanthropists who recognize that complex problems require dynamic and transformative solutions. Strategic learning promises that lessons that emerge from evaluation and other data sources will be timely, actionable, and forward-looking, and that strategists will gain insights that help them make their next move in a way that increases their likelihood of success. As a concept, strategic learning is an easy sell. But as a real-life practice, it is much messier and more complicated. Through a fast-paced, innovative storytelling format, 10 presenters, including grantees, program staff and eval |